Considerations for AI in Localization Quality Framework

When I reflect on how localization was done years ago with the traditional waterfall approach compared to today's continuous localization model, I honestly feel relieved, particularly when I think about specific scenarios such as my time at EA. Every summer, the localization and QA teams faced significant challenges because the game's production calendar mirrored the football season: football slows down in the summer and returns in full force by September. Working on a game like FIFA back then, some 15 years ago, meant a difficult work-life balance, since summer, when my kids were on vacation, was my busiest work period. On the production side, a last-minute player transfer (common in football) meant a ton of work to quickly update assets and move the player from one team to another. These final project stages were extremely stressful in the waterfall model.

Everything changed dramatically with the rise of agile methodologies and continuous development, which introduced continuous localization into our workflows. This shift eliminated the feeling that all the work piled up at the end. With Agile, development teams started delivering functionality in two-week sprints, shortening development cycles from months to weeks. This had overwhelmingly positive effects: issues found in a sprint could be fixed quickly, and "urgent hotfixes" became less necessary unless the bug was significant. The idea of "we’ll fix it in the next sprint" became the norm.

Over time, sprints became shorter, and the boundaries between creation and localization blurred. Today, some companies release localized content to their platforms multiple times daily. From a traditional LQA perspective, this presents a big challenge. In an environment where content is released daily or weekly, traditional human LQA doesn’t fit. There’s simply no time to complete development, traditional localization, and traditional LQA in one week or, worse, one day.

In these scenarios, my focus until now has been to move localization best practices earlier in the workflow (the "shift left" mindset!). The hope is that doing things right from the start will minimize the number of bugs. While this approach has some truth to it, it has always made me uneasy to release content without proper LQA.

Now, it seems we’re entering another shift with the rapid advancement of AI technology. AI feels like it could be an even more significant change than what we experienced moving from waterfall to agile methodologies. Specifically, I’ve been thinking about the scenario I couldn’t solve before: how do we automate LQA when manual LQA is impossible? This is where the idea of AI post-editing frameworks becomes worth exploring.

Where AI Steps In

AI offers a promising solution to the challenges I’ve outlined. By leveraging AI-driven post-editing and automated quality assessment frameworks, we can make quality evaluations faster, more scalable, and more consistent.

At least three areas of benefit come to mind:

Automated Checks: AI can review localized content in seconds for consistency in terminology, grammatical errors, and formatting issues.

For example, you can prompt ChatGPT to evaluate a translation against your glossary: "Does the term 'Save' align with the glossary term 'Guardar'? Flag any issues with terminology consistency."

Real-Time Feedback: Instead of waiting for a complete LQA cycle, AI tools can provide instant insights into quality.

For example, you can upload a batch of translated strings to ChatGPT and ask it to identify awkward phrasing or suggest corrections on the spot.

Prioritization: AI can highlight high-risk issues (e.g., untranslated strings or critical mistranslations) that need immediate attention, allowing teams to focus on the most important tasks.

Here, we can ask ChatGPT to categorize issues by severity, such as critical, moderate, or minor, and prioritize fixes based on its feedback.
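
As a rough illustration of the first and third points, some of these checks need no model at all and can run on every content push. The Python sketch below flags untranslated strings and glossary mismatches, tags each finding with a severity, and sorts the results so the worst come first. The names (`GLOSSARY`, `check_string`, `triage`) and the severity assigned to each rule are my own assumptions for illustration, not part of any existing tool.

```python
from dataclasses import dataclass

# Hypothetical glossary: English source term -> approved Spanish term.
GLOSSARY = {"Save": "Guardar", "Settings": "Ajustes"}

# Assumed severity ranking; a lower number means "fix first".
SEVERITY_ORDER = {"critical": 0, "moderate": 1, "minor": 2}


@dataclass
class Issue:
    string_id: str
    severity: str
    message: str


def check_string(string_id: str, source: str, target: str) -> list[Issue]:
    """Run simple rule-based checks on one source/target pair."""
    issues = []
    if not target.strip() or target.strip() == source.strip():
        # Empty or identical to the source: treated here as untranslated.
        # (Brand names can legitimately match, so real rules need exceptions.)
        issues.append(Issue(string_id, "critical", "String appears untranslated"))
        return issues
    for term, approved in GLOSSARY.items():
        # Naive substring match; real terminology checks must handle inflection.
        if term.lower() in source.lower() and approved.lower() not in target.lower():
            issues.append(
                Issue(string_id, "moderate", f"Expected glossary term '{approved}' for '{term}'")
            )
    if source.rstrip().endswith(".") != target.rstrip().endswith("."):
        issues.append(Issue(string_id, "minor", "Final punctuation differs from source"))
    return issues


def triage(rows):
    """Check every (id, source, target) row and sort the issues by severity."""
    found = [issue for row in rows for issue in check_string(*row)]
    return sorted(found, key=lambda issue: SEVERITY_ORDER[issue.severity])


rows = [
    ("btn_save", "Save", "Salvar"),
    ("msg_done", "Settings updated.", "Ajustes actualizados"),
    ("btn_exit", "Exit", "Exit"),
]
for issue in triage(rows):
    print(issue.severity, issue.string_id, issue.message)
```

Rule-based checks like these could run first, leaving the more subjective questions (fluency, tone, awkward phrasing) for the AI review step.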

In this continuous development environment, AI post-editing frameworks can help maintain quality while keeping up with the pace of content releases. Just a few months ago, I would have considered this science fiction, and I had resigned myself to thinking it wasn’t possible. But now, with tools like ChatGPT, it feels within reach.

Considerations for Implementing AI in Quality Frameworks

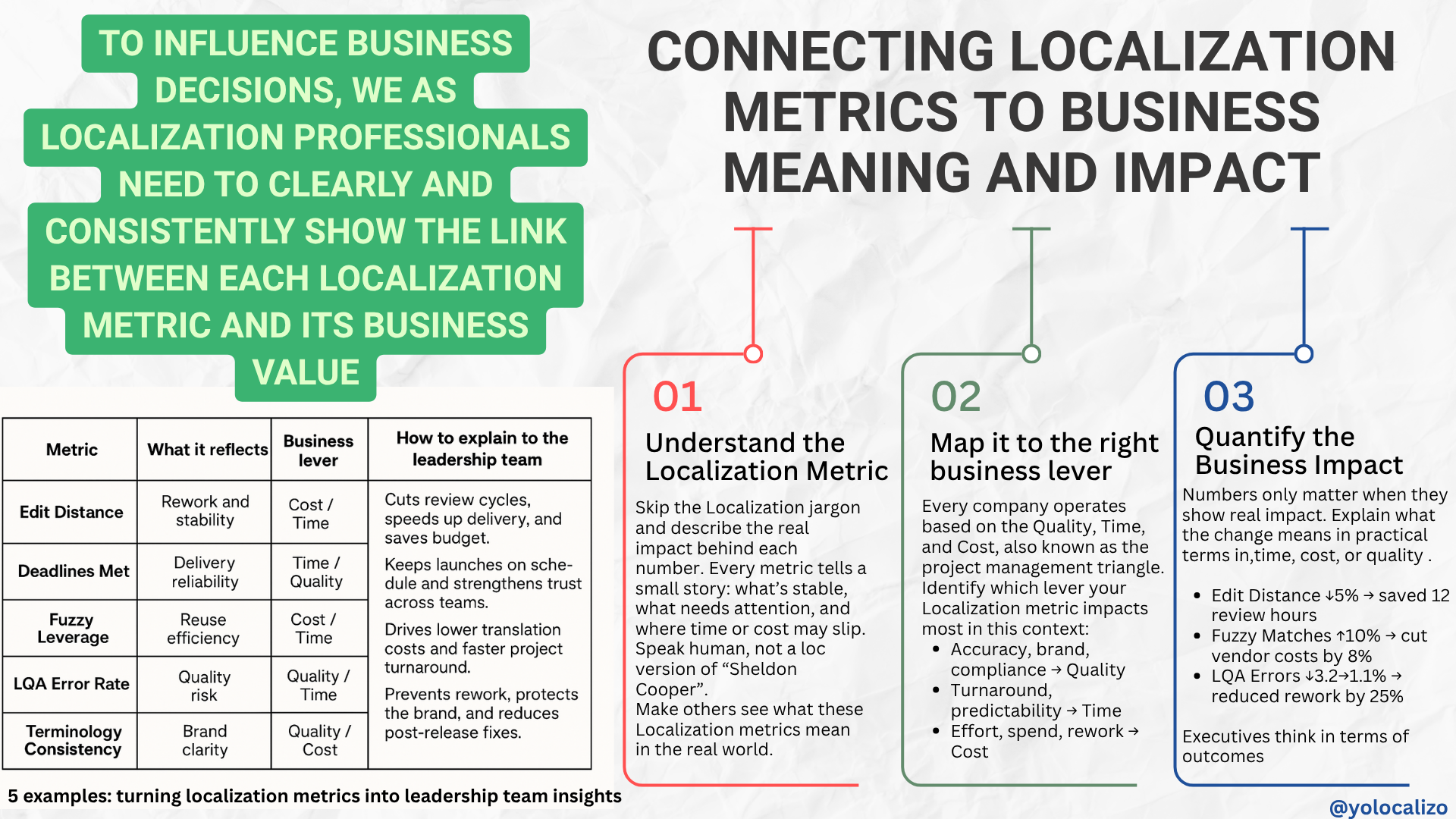


To make this vision a reality, there are three key factors to address:

1. Define Clear Quality Goals

AI needs to understand what "good quality" looks like. To achieve this:

Establish clear quality metrics (e.g., terminology consistency, fluency, and cultural appropriateness).

Use frameworks like MQM (Multidimensional Quality Metrics), which, due to its customization options, works well for gaming and mobile apps.

Align these metrics with the development team’s priorities (e.g., "player experience first").

ChatGPT can help here by scoring translations against MQM parameters. For example, prompt it: "Rate this translation for fluency and terminology consistency on a scale of 1 to 5."
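
One way to make such ratings comparable across releases is to turn annotated issues into a single number. The Python sketch below computes an MQM-style penalty score normalised per 1,000 words. The severity weights and the pass threshold are assumptions I picked for illustration; teams typically tune both to their own content and quality goals.

```python
# Assumed severity weights; tune these to your own quality goals.
SEVERITY_WEIGHTS = {"minor": 1, "major": 5, "critical": 25}


def penalty_per_thousand_words(issues, word_count):
    """Return weighted penalty points normalised per 1,000 evaluated words.

    `issues` is a list of (category, severity) tuples, for example the
    output of a human reviewer or of an AI evaluation step.
    """
    if word_count <= 0:
        raise ValueError("word_count must be positive")
    total = sum(SEVERITY_WEIGHTS[severity] for _category, severity in issues)
    return total * 1000 / word_count


def passes(issues, word_count, threshold=20.0):
    """Hypothetical release gate: pass when the penalty is at or below the threshold."""
    return penalty_per_thousand_words(issues, word_count) <= threshold


issues = [
    ("terminology", "major"),
    ("fluency", "minor"),
    ("fluency", "minor"),
]
score = penalty_per_thousand_words(issues, word_count=500)
print(f"Penalty per 1,000 words: {score:.1f}")
print("Pass" if passes(issues, 500) else "Fail")
```

Normalising by word count keeps a short UI update and a large content drop on the same scale, which makes it easier to agree on a threshold with the development team.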

2. Prepare High-Quality Training Data

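AI performance depends on the quality of the data it is given, whether as training data or as reference material in the prompt. AI hallucinations have become a significant challenge in production environments, and solid reference data is one way to reduce them. To address this: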

Provide examples of high-quality translations that match your brand voice and tone. You can consider feeding ChatGPT a glossary and a set of translations and asking it to validate terminology usage or rewrite segments to match your style better.

Include glossaries, style guides, and approved translations to set a strong baseline.

Continuously update training data based on feedback and new releases. Assign someone from the localization team to manage this task, making it part of their BAU responsibilities.
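
To make this concrete, the reference assets can be assembled into the prompt programmatically, so every review request carries the same baseline. The Python sketch below only builds the prompt text; it does not call any AI service. The function name, the prompt wording, and the sample data are all hypothetical.

```python
def build_review_prompt(glossary, style_rules, approved_examples, source, target):
    """Assemble a plain-text review prompt from localization reference assets."""
    glossary_lines = "\n".join(
        f"- {en} -> {es}" for en, es in sorted(glossary.items())
    )
    style_lines = "\n".join(f"- {rule}" for rule in style_rules)
    example_lines = "\n".join(
        f"- EN: {en} | ES: {es}" for en, es in approved_examples
    )
    return (
        "You are reviewing an English-to-Spanish translation.\n\n"
        f"Glossary (mandatory terms):\n{glossary_lines}\n\n"
        f"Style rules:\n{style_lines}\n\n"
        f"Approved translations for reference:\n{example_lines}\n\n"
        f"Source: {source}\n"
        f"Translation: {target}\n\n"
        "List any terminology or style issues, then suggest a corrected translation."
    )


# Sample data, invented for this example.
prompt = build_review_prompt(
    glossary={"Save": "Guardar", "Settings": "Ajustes"},
    style_rules=["Use informal 'tú'", "Keep button labels under three words"],
    approved_examples=[("Save changes", "Guardar cambios")],
    source="Save your settings",
    target="Salva tus ajustes",
)
print(prompt)
```

Keeping the glossary, style rules, and approved examples as data rather than hard-coded text means whoever owns this as a BAU task only has to update the assets, not the prompt itself.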

3. Build Context Awareness

AI struggles without understanding context, which has been a recurring issue for those experimenting with AI solutions. To improve context:

Train AI to evaluate localized content in its functional or visual context (e.g., UI previews for mobile apps). ChatGPT handles image input quite well, so we can provide visual references or explain the functional context of the translation in the prompt, such as: "This string appears on a button in a mobile app. Check if it’s clear and appropriate in Spanish."

Include in-game or in-app references to reduce misinterpretations.
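
If context metadata travels with each string, the contextual part of the prompt can be generated rather than typed by hand. The Python sketch below assumes a hypothetical `StringContext` record with a UI element type, a screen name, an optional character limit, and an optional screenshot path; none of these fields come from a real tool, and attaching the actual image would depend on the AI service you use.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class StringContext:
    ui_element: str                   # e.g. "button", "tooltip"
    screen: str                       # where the string appears
    max_chars: Optional[int] = None   # hypothetical UI length limit
    screenshot: Optional[str] = None  # hypothetical path to a UI preview


def contextual_prompt(target: str, language: str, ctx: StringContext) -> str:
    """Describe where a translated string appears, then ask for a review."""
    lines = [
        f"This string appears on a {ctx.ui_element} "
        f"in the {ctx.screen} screen of a mobile app."
    ]
    if ctx.max_chars is not None:
        # A length check is deterministic, so do it in code and state the result.
        verdict = "fits" if len(target) <= ctx.max_chars else "exceeds"
        lines.append(
            f"The UI allows {ctx.max_chars} characters; "
            f"the translation {verdict} this limit ({len(target)} characters)."
        )
    if ctx.screenshot:
        lines.append(f"A UI preview is available at: {ctx.screenshot}")
    lines.append(f'Check if "{target}" is clear and appropriate in {language}.')
    return "\n".join(lines)


ctx = StringContext(ui_element="button", screen="checkout", max_chars=12)
prompt = contextual_prompt("Guardar cambios", "Spanish", ctx)
print(prompt)
```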

Just as we’ve said for years that "context is king" for translators, context remains fundamental for AI.

Final Thoughts

The shift to AI-driven localization quality frameworks could be as transformative as the move from waterfall to agile methodologies. If we can address the three ideas explored in this post (defining goals, preparing high-quality data, and ensuring context awareness), I believe we can develop frameworks to bridge the gap in scenarios where manual LQA isn’t possible. I’m optimistic about exploring how these frameworks can redefine our approach to localization quality!

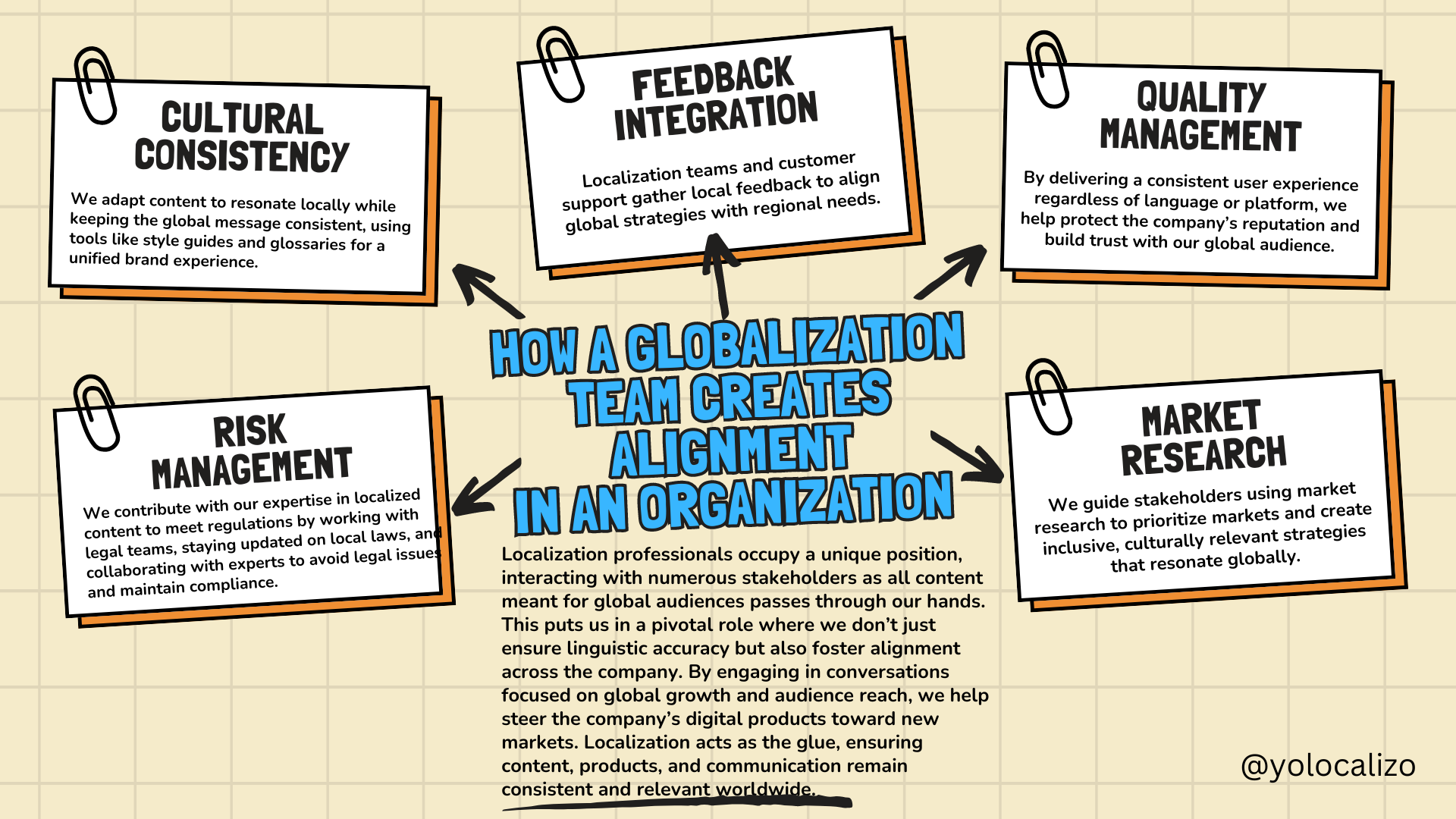

This feels like a pivotal moment. Localization teams are being asked to support more markets, move faster, use AI responsibly, and show impact, not just output. Expectations are higher than ever, but many teams are still trained mainly for execution. We are strong at delivering localization work, yet we often struggle to move from output to outcome and to clearly explain the impact of what we do.