The Localization gap and a couple of ideas to close it

One of the books that had the most impact on my life was the well-known The 7 Habits of Highly Effective People.

From time to time I reread it or look at the infographics available on Pinterest (this one, for example, is very good) to remember the key concepts.

That is why, when the children's edition, The 7 Habits of Happy Kids, came out, I did not hesitate for a second to buy it for my kids, especially my little one (10 years old), who has a slightly more scattered mind ☺️

Hopefully this little one will remember the habits as he grows ☺️

We have read it several times together. It is a good book that, without losing the essence of the 7 Habits, is adapted with stories so that the little ones can understand it.

The book starts strong. Habit number 1 is Be Proactive, and it tells the story of Sammy Squirrel, who is bored, complains about it all the time, and does not know what to do to entertain himself. During the parable, Sammy comes to understand that if he is bored it is his own fault, and that he has to be responsible for his own entertainment.

Let's bring this fable to our localization world.

If I have to identify an area where we have to be proactive, and that we should stop complaining about, it is that the “world” (our stakeholders) does not understand what we do, or how we do it, when we carry out localization tasks.

Whose fault is that?

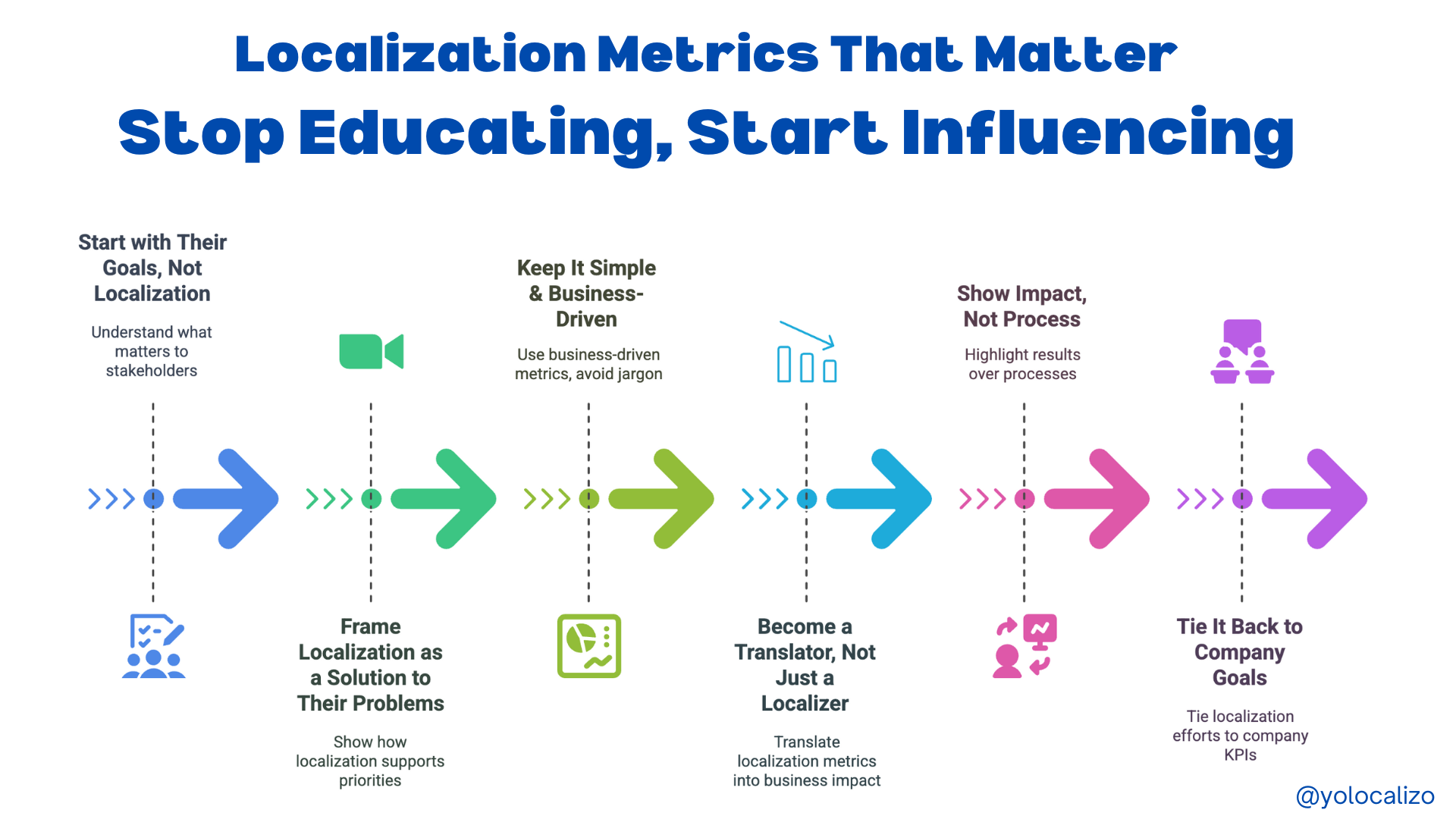

Today I want to write specifically about metrics and the gap in the metrics we track.

A Localization team tends to put a lot of emphasis on Language Quality Metrics. The problem is that these metrics are not very relevant for a Product Owner or a Producer.

A dashboard with metrics showing linguistic errors is less useful to Product development teams than we would like to believe.

Of course, a Globalization team has to have these metrics; they are essential for measuring and guaranteeing the quality we receive from the LSPs.

But if we copy and paste them into a slide to show a Product Owner, the reaction might be “nah” 😒

A Product Owner/Product team is measuring metrics like:

• Retention Rate

• Churn Rate

• Daily Active Users (DAU)

• Monthly Active Users (MAU)

• Daily Sessions per DAU

• Stickiness

• Cost Per Acquisition (CPA)

• Lifetime Value (LTV)

None of those metrics has a direct relationship with typical Localization metrics:

• On-time delivery: total deliveries that are on time or early / total deliveries

• Error rate: ∑ weighted errors / total word count

• Pass rate: total passed tests / total tests

• Average throughput rate: ∑ weighted word count / (working days - holidays)

• Fully loaded cost per word

• % missing translations
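To make the localization ratios listed above concrete, here is a minimal Python sketch of how they could be computed from raw counts for one reporting period. The class name, the field names, and the choice to return 0.0 when a denominator is zero are all assumptions made for illustration; they do not come from any particular tool.

```python
from dataclasses import dataclass


@dataclass
class LocalizationPeriod:
    """Raw counts for one reporting period (all field names are hypothetical)."""
    deliveries_total: int
    deliveries_on_time: int      # delivered on time or early
    weighted_errors: float       # sum of severity-weighted errors
    word_count: int              # total words reviewed
    tests_passed: int
    tests_total: int
    weighted_word_count: float   # sum of weighted words translated
    working_days: int
    holidays: int


def ratio(numerator: float, denominator: float) -> float:
    """Divide safely, returning 0.0 when there is nothing to measure."""
    return numerator / denominator if denominator else 0.0


def localization_metrics(p: LocalizationPeriod) -> dict:
    """Compute the ratio-style localization metrics for one period."""
    return {
        "on_time_delivery_rate": ratio(p.deliveries_on_time, p.deliveries_total),
        "weighted_error_rate": ratio(p.weighted_errors, p.word_count),
        "test_pass_rate": ratio(p.tests_passed, p.tests_total),
        "throughput_per_day": ratio(p.weighted_word_count,
                                    p.working_days - p.holidays),
    }
```

Notice that every value here is a statement about the localization process itself; none of them says anything about retention, churn, or LTV, which is exactly the gap.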

We are speaking different “languages”… When we see that we measure different things, it is not surprising that Localization teams feel that we are not always understood by Product teams, and that Product teams do not fully understand our “-ation obsession” (culturalization, internationalization, localization, translation…), our unusual metrics, and how we bring business value.

Let's do as Sammy did: let's be proactive, and let's think about what we can do to reach out to our stakeholders and speak the same language.

Is it possible?

Yes

How?

We need to ask ourselves what we have in common with them

And what do we have in common?

Customer Experience Satisfaction

This is what unites us

That underscore badly placed in a string, that hyphen in the wrong place, or that typo may go completely unnoticed by our users, or it may not! That is what we have to measure, to see whether it has an impact on product metrics.

How can we do it?

Getting Language Quality Metrics is just the first step. The second step, the one that lets us close the gap, is creating Customer Experience Metrics.

Here is an idea for how to do it:

Customer Experience = Sentiment Analysis = Localization quality

What is sentiment analysis?

Sentiment analysis is the process of using an algorithm to categorize content based on how positive, neutral, or negative it is perceived to be.

We can perform a sentiment analysis manually if we have a small dataset, but it's time-consuming. So a better idea is to set up a system to get automated feedback.
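As a rough illustration of what such an automated system does at its core, here is a toy Python sketch that sorts a piece of feedback into positive, neutral, or negative by counting words from two small word lists. The word lists and the function name are assumptions invented for this example; a real setup would rely on a trained model or a much larger, language-specific lexicon.

```python
import re

# Tiny illustrative lexicons (hypothetical); far too small for real use.
POSITIVE = {"love", "great", "good", "clear", "natural", "awesome"}
NEGATIVE = {"bad", "confusing", "wrong", "broken", "unnatural", "awful"}


def classify_sentiment(text: str) -> str:
    """Label text by comparing counts of positive and negative words."""
    words = re.findall(r"[a-z']+", text.lower())
    score = sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"
```

For instance, `classify_sentiment("The translation is confusing and unnatural")` returns `"negative"`, because two words from the negative list appear and none from the positive one.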

1. Monitoring forums, social media, and user feedback

A good place to get sentiment feedback is the official forums of our products or fan boards. We can also monitor what people are posting about our company and products by using third-party sites and apps.

Google Alerts is easy to set up, and it’ll help us monitor whatever we want to monitor.

We can go even deeper with tools such as Yext, where we can manage, measure, and improve our reputation by tracking customer reviews.

Setting up filters in these systems, so that we get an alert whenever there is a reference to “language”, will help us collect end-user feedback.
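The filtering idea can be sketched in a few lines of Python. This is only an illustration of keyword filtering on text we have already collected, not the way Google Alerts or Yext work internally; the keyword stems and function names are assumptions chosen for the example.

```python
import re

# Hypothetical keyword stems that suggest a post is about language quality.
LANGUAGE_PATTERN = re.compile(
    r"\b(?:languag|translat|locali[sz]|typo)\w*", re.IGNORECASE
)


def mentions_language(post: str) -> bool:
    """Return True if the post contains any language-related keyword."""
    return LANGUAGE_PATTERN.search(post) is not None


def filter_language_feedback(posts):
    """Keep only the posts that refer to language or translation."""
    return [post for post in posts if mentions_language(post)]
```

Matching on stems rather than whole words means that "translation", "translated", and "translations" are all caught by the same entry, at the cost of the occasional false positive.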

Microsoft is known as one of the companies that makes the most effort to have sentiment analysis feedback as one of its key metrics.

Source: Displayr

To be done well, sentiment analysis feedback needs to be captured over a long period and shown in a histogram view, so we can analyze the trends over time.
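One way to get that view over time is to bucket already-labeled feedback by month and count the labels in each bucket. The Python sketch below assumes each item is a (date, label) pair with labels "positive", "neutral", or "negative"; the function names and the monthly granularity are assumptions for illustration.

```python
from collections import Counter, defaultdict


def sentiment_by_month(feedback):
    """Group (date, label) pairs into per-month label counts, oldest first."""
    buckets = defaultdict(Counter)
    for day, label in feedback:
        buckets[day.strftime("%Y-%m")][label] += 1
    return dict(sorted(buckets.items()))


def negative_share(counts: Counter) -> float:
    """Fraction of a month's feedback that was labeled negative."""
    total = sum(counts.values())
    return counts["negative"] / total if total else 0.0
```

The per-month counts can be fed straight into a bar chart, and the negative share per month gives a single line that is easy to put next to a product metric such as churn.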

2. Short in-app surveys

Another good way to get customer experience (sentiment) feedback is with surveys.

Asking our users just a few relevant questions is a great way to get direct feedback that we can link to customer experience.

From a Localization perspective, we can even ask our users directly what we want to know.

We can even go hardcore and include one question saying something like:

Will you keep using this app/service if it's not available in your native language? Yes/no

Voilà! Ask your customers that, and you will have a very powerful metric of the business value that a Globalization team is bringing to the organization.
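Turning the answers to that question into a number is straightforward. The Python sketch below assumes the survey tool can export (locale, answer) pairs where the answer is "yes" or "no"; that export format and the function name are hypothetical.

```python
from collections import defaultdict


def would_leave_share_by_locale(responses):
    """Share of users per locale who answered "no" to the survey question."""
    totals = defaultdict(int)
    would_leave = defaultdict(int)
    for locale, answer in responses:
        totals[locale] += 1
        if answer.strip().lower() == "no":
            would_leave[locale] += 1
    return {locale: would_leave[locale] / totals[locale] for locale in totals}
```

A high share for a given locale is a direct, user-stated signal of how much the localized version matters there, expressed in terms a Product Owner already cares about.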

Customers are constantly thinking of ways our products can work better for them. If we ask them, they tend to respond.

In-app surveys work really well because the survey can be prompted while our customers are using the app, so their feedback will likely be precise and to the point (and not ambiguous).

The key here is to create a very short survey. Our users are in the app for a certain purpose (which is not to answer a survey), so it is not a great idea to throw a long survey at them.

I believe 3 questions is the max we should include to avoid survey fatigue.

If you are not familiar with how to create in-app surveys, Intercom.io is a good tool to start with.

In summary…

Users don't look at the product and say, "Wow! This translation is awesome."

Probably they don't even care about the translation, and that is actually a good thing, as it means that the translation effort goes unnoticed. Localization works as an enabler: if the language quality is good, we are enabling the use of the app, but if the translation doesn’t make sense, if it’s confusing or unnatural, then the language becomes an obstacle to delivering a proper customer experience.

Only then does it catch the attention of the user.

Users see the product as a whole, and they don't distinguish between QA efforts, marketing efforts, coding efforts, or localization efforts. Therefore we need to find a way to isolate the language contribution to the customer experience.

Our responsibility as Localization professionals is to find a way to capture customer experience feedback with methods such as sentiment analysis or in-app surveys.

What other strategies do you think can be used to obtain customer experience that we can somehow link to language?

How can we close the gap between the relevant metrics for a localization team vs. the relevant metrics for a development team?

Have a fab week!

@yolocalizo

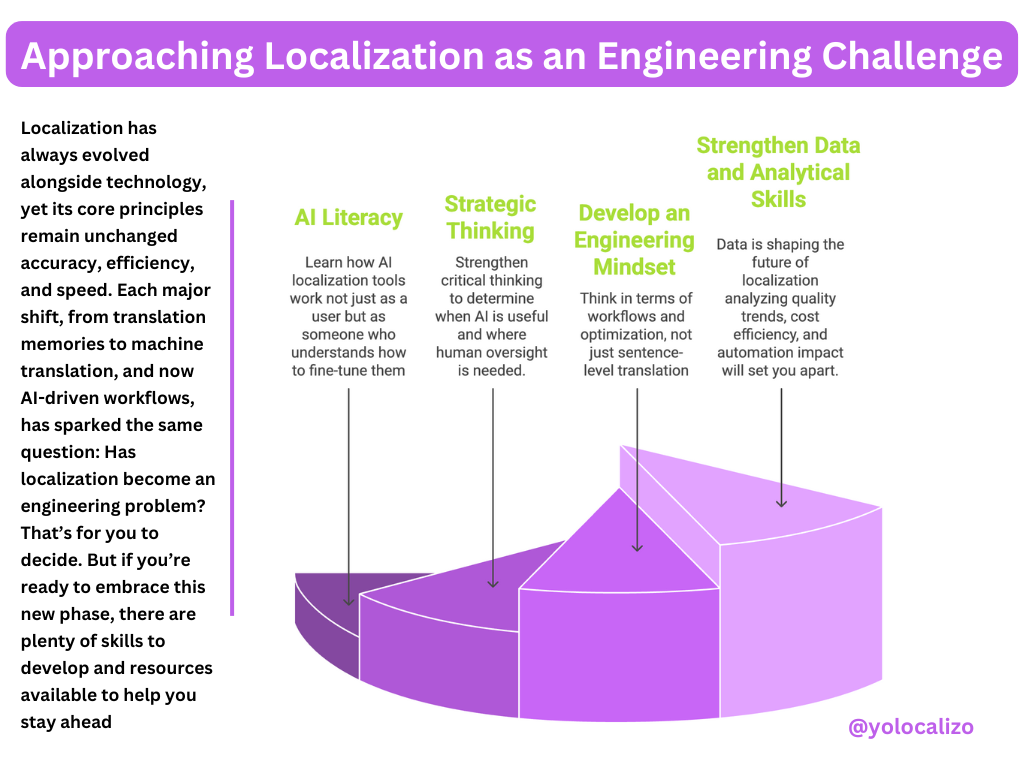

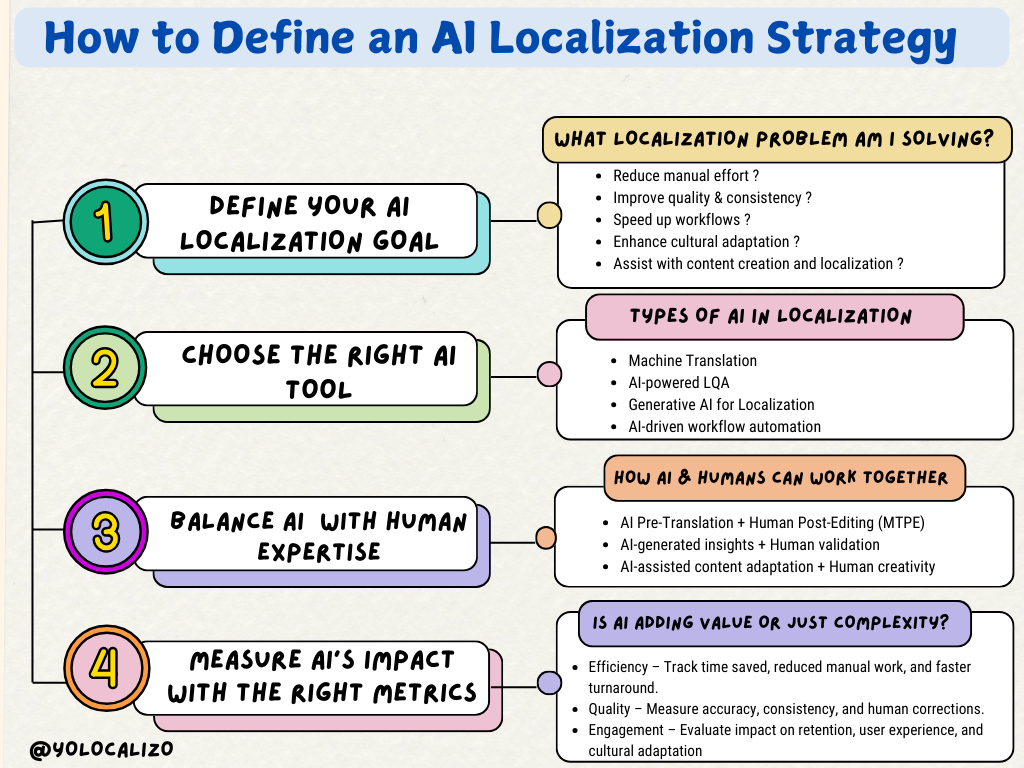
