From frustration to precision: how Chain of Thought prompting enhances ChatGPT results

A couple of weeks ago, after finishing my session at the Toastmasters club I attend nowadays, we were having some beers and tapas (the after-Toastmasters session is my favorite part, to be honest 😊) while discussing various topics. Inevitably, the subject of AI, LLMs in general, and ChatGPT in particular came up. Given that Toastmasters is an organization dedicated to public speaking, presentations, speeches, and words, it’s natural that ChatGPT would come up.

As I was talking with Salva, one of the club members with whom I’m building a lovely friendship, he mentioned that he hadn't quite gotten the hang of ChatGPT yet, as the responses he received were very generic, unreliable, and seemed almost made up.

I completely understand him.

You hear in different places that ChatGPT is incredible and that it’ll forever change the world as we know it. Then, you open ChatGPT, type a prompt, hit enter, and impatiently wait for a response. After a few seconds, frustration sets in because the response is poor. Instead of the detailed and helpful answer you expected, you get something generic, ambiguous, or outright false (hallucinations).

In the middle of that lively conversation, while eating some bravas and olives, I talked to him about hallucinations and how sometimes you need to push ChatGPT a bit to explain itself better. I’ve been playing with ChatGPT for about a year and a half and have learned some techniques along the way. One of them is Chain of Thought prompting, which I explained to my friend Salva and encouraged him to try, because it improves the results significantly.

What is "Chain of Thought Prompting"?


Chain of Thought (CoT) prompting is a technique used with generative AI models like ChatGPT to get more accurate and coherent responses. It involves breaking a question or prompt into smaller, related sub-prompts that follow a logical sequence, then using those sub-prompts to guide the model toward a well-structured and informative response. This step-by-step approach helps AI models reason more like humans do, improving how they solve problems.

By prompting the AI to explain its thought process, we can see how it arrived at a conclusion. I prefer this approach because it feels more transparent and easier to trust: it improves the accuracy and completeness of the answers and lets users understand and verify the AI's logic.

For example, if you ask an AI, "If it's raining, what should I take with me?" you might get an answer like “an umbrella, a raincoat.” With Chain of Thought prompting, the AI would also lay out its reasoning in sequence (rain gets you wet, so you need something that keeps you dry, so an umbrella or a raincoat), giving you a well-thought-out and logical response. That’s a trivial example, but I hope it helps to make the point :)

Zero-Shot Chain of Thought (CoT)

Among the different CoT techniques, my favorite by far is Zero-Shot CoT. "Zero-shot" means we give the model no worked examples; we only ask it to reason step by step. The technique is used to improve how models perform on reasoning tasks. There are many times when ChatGPT seems to say whatever comes to mind. Sometimes I ask it a question and think, "Where did it get that from?" Zero-Shot CoT helps reduce that feeling.

How Does It Work?

It all comes down to how we prompt the AI. Instead of asking only for an answer, we ask it to explain its reasoning as it solves the problem. This helps the AI organize its thoughts and come up with a better answer.
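As a minimal sketch of that idea, the snippet below simply appends the step-by-step instruction to whatever question you were going to ask. The function name `with_chain_of_thought` and the constant `COT_SUFFIX` are my own illustrative choices, not part of any ChatGPT API; the instruction text is the same phrase used in the localization example in this article.

```python
# Hypothetical helper: turns a plain question into a zero-shot CoT prompt.
# Nothing here calls a model; it only builds the text you would paste or send.
COT_SUFFIX = "Explain your rationale step by step."


def with_chain_of_thought(question: str, suffix: str = COT_SUFFIX) -> str:
    """Return the question followed by a step-by-step reasoning instruction."""
    return f"{question.strip()}\n\n{suffix}"


plain_prompt = 'Translate the slogan "Because You\'re Worth It" into Spanish.'
print(with_chain_of_thought(plain_prompt))
```

Keeping the suffix as a parameter makes it easy to try other wordings and compare which one gives you the most useful reasoning.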

Why is it Useful?

Zero-Shot CoT is helpful because it makes a large language model (LLM) lay out its reasoning as step-by-step explanations. This helps us understand how the model arrived at a conclusion, which increases our trust in its answers. It also lets us spot errors in the reasoning and check that the conclusions rest on sound logic. That transparency makes the AI's responses more reliable and easier to understand.
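To review the reasoning one step at a time, it can help to split the response into its steps. The sketch below assumes the model labels its steps with headings like "Step 1: ..." (optionally wrapped in `**`), which is only a common pattern and not something ChatGPT guarantees. The function name `split_steps` and the sample response are hypothetical.

```python
import re
from typing import List, Tuple

# Assumed heading shape: "Step 1: Title" or "**Step 1: Title**" on its own line.
STEP_HEADING = re.compile(r"^\**Step (\d+):\s*(.+?)\**[ \t]*$", re.MULTILINE)


def split_steps(response: str) -> List[Tuple[int, str, str]]:
    """Split a step-by-step response into (number, title, body) tuples."""
    matches = list(STEP_HEADING.finditer(response))
    steps = []
    for index, match in enumerate(matches):
        # A step's body runs until the next heading, or to the end of the text.
        end = matches[index + 1].start() if index + 1 < len(matches) else len(response)
        body = response[match.end():end].strip()
        steps.append((int(match.group(1)), match.group(2).strip(), body))
    return steps


# Hypothetical response text, written only to exercise the parser.
sample_response = (
    "**Step 1: Understand**\n"
    "The slogan is about self-worth.\n\n"
    "**Step 2: Translate**\n"
    '"Porque tú lo vales"'
)

for number, title, body in split_steps(sample_response):
    print(f"[{number}] {title}: {body}")
```

If the response uses a different layout (numbered lists, for example), the pattern would need adjusting; the point is only that each step becomes something you can check on its own.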

Practical Example Applied to Localization

Imagine we want to translate a brand slogan. This is always complicated because different factors come into play depending on the market. Let's take, for example, the L'Oréal slogan "Because You're Worth It," from a shampoo I use very little (or not at all 😊).

A plain translation request gets a generic answer. Why use that one? Is it accurate? How do I know it is accurate? The answer to all those doubts is a simple, short, but effective prompt: “Explain your rationale step by step.”

Zero-Shot CoT kicks in when we add the prompt “Explain your rationale step by step.” With it, we can better understand ChatGPT's choice and check whether a particular step contains biases, hallucinations, or errors.

Below is a summary of the different steps we get by using that prompt.

---------

**Step 1: Understanding the Original Slogan**

*Prompt:* What is the core message of "Because You're Worth It"?

*Response:* The slogan emphasizes self-worth, empowerment, and the idea that consumers deserve high-quality products because of their intrinsic value.

**Step 2: Identifying Key Concepts**

*Prompt:* What are the key concepts that need to be preserved in the translation?

*Response:* The key concepts are self-worth, empowerment, and deservingness. The slogan should make the consumer feel valued and special.

**Step 3: Finding Equivalent Expressions in Spanish**

*Prompt:* What Spanish phrases convey similar concepts of self-worth and empowerment?

*Response:* Possible phrases include "Porque tú lo mereces" (Because you deserve it) and "Porque tú lo vales" (Because you are worth it). Both expressions capture the essence of the original slogan, but we need to choose the one that aligns best with the brand's tone and impact.

**Step 4: Cultural and Linguistic Appropriateness**

*Prompt:* Which of the Spanish phrases best captures the intended emotional impact and cultural relevance?

*Response:* "Porque tú lo vales" aligns closely with the original slogan's emphasis on intrinsic value and empowerment. It directly translates the idea of worthiness in a way that resonates with Spanish-speaking audiences.

**Conclusion**

*Final Translation:* "Porque tú lo vales"

*Rationale:*

- **Preservation of Core Message:** The translation retains the core message of self-worth and empowerment.

- **Cultural Relevance:** The phrase resonates well with Spanish-speaking audiences, maintaining the emotional impact.

- **Brand Consistency:** L'Oréal's use of this translation in official materials confirms its appropriateness and effectiveness.

**Verification**

L'Oréal's global marketing strategy ensures that slogans are carefully translated to maintain their intended impact across different languages and cultures. By following these steps, we ensure that "Porque tú lo vales" accurately reflects the brand's message and connects with the target audience effectively.

---------
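The four steps above could also be driven explicitly, one sub-prompt at a time, with each answer fed back in as context for the next question. The sketch below is an assumption about how you might wire that up: `run_chain` and `fake_ask` are hypothetical names, and `fake_ask` is a stand-in that never contacts a model. In practice you would pass in your own function that sends the prompt to ChatGPT and returns its reply.

```python
from typing import Callable, List, Tuple

# The four sub-prompts from the walkthrough above.
SUB_PROMPTS = [
    'What is the core message of "Because You\'re Worth It"?',
    "What are the key concepts that need to be preserved in the translation?",
    "What Spanish phrases convey similar concepts of self-worth and empowerment?",
    "Which of the Spanish phrases best captures the intended emotional impact "
    "and cultural relevance?",
]


def run_chain(sub_prompts: List[str], ask: Callable[[str], str]) -> List[Tuple[str, str]]:
    """Ask each sub-prompt in order, including earlier Q&A pairs as context."""
    transcript: List[Tuple[str, str]] = []
    for prompt in sub_prompts:
        context = "\n".join(f"Q: {q}\nA: {a}" for q, a in transcript)
        full_prompt = f"{context}\nQ: {prompt}" if context else f"Q: {prompt}"
        transcript.append((prompt, ask(full_prompt)))
    return transcript


def fake_ask(prompt: str) -> str:
    """Stand-in for a real model call: reports how many questions it was shown."""
    return f"(answer based on {prompt.count('Q: ')} question(s))"


for number, (question, answer) in enumerate(run_chain(SUB_PROMPTS, fake_ask), start=1):
    print(f"Step {number}: {question}\n  {answer}")
```

Passing the model call in as a plain function keeps the chain independent of any particular provider, and makes it easy to test the flow without spending tokens.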

Conclusion

CoT prompting techniques make AI responses more accurate and clear by guiding the AI to think step by step. Zero-Shot CoT is especially useful because it gets the AI to explain its reasoning, making its answers more trustworthy and easier to understand.

This approach is particularly effective in localization, where understanding language and culture is crucial. By asking the AI to explain its steps, we can catch and fix any mistakes, ensuring translations are spot-on and resonate with our local audiences.

@yolocalizo

Reference material

If you want to know more, please check out the following reference material, which covers the CoT topic in more detail.

Master Prompting Concepts: Chain of Thought Prompting https://promptengineering.org/master-prompting-concepts-chain-of-thought-prompting/

How Chain of Thought Prompting Improves Your ChatGPT Outputs

Let’s Think Step by Step: Advanced Reasoning in Business with Chain-of-Thought Prompting

What is chain-of-thought prompting? Examples and benefits https://www.techtarget.com/searchenterpriseai/definition/chain-of-thought-prompting

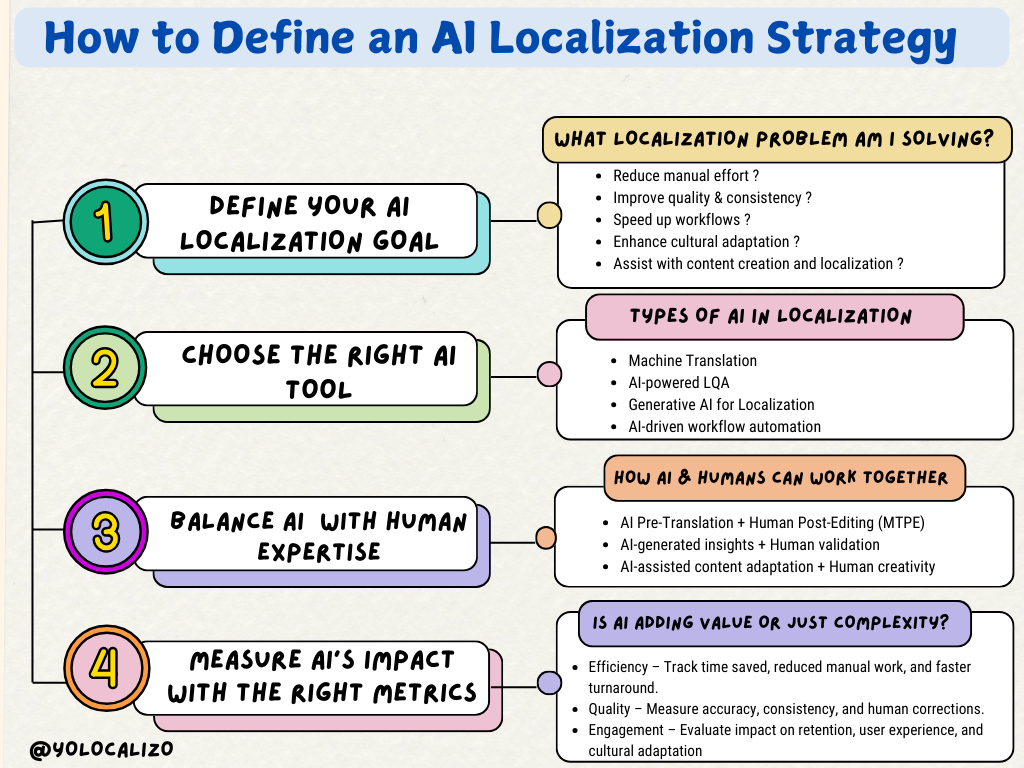

Before jumping on the AI bandwagon: What localization problem are you trying to solve? AI is everywhere right now, including in localization.

But before jumping on the bandwagon, we need to stop and ask:

Are we solving the right problem?